Human-in-the-Loop Software Development Agents

Atlassian’s HULA framework automates repetitive coding tasks, boosting developer efficiency while keeping engineers in control. Recognized by ICSE 2025, HULA is shaping the future of AI-assisted development.

Introducing HULA: Human-in-the-loop LLM-based agents framework

Atlassian’s research on HULA has been accepted by the IEEE/ACM International Conference on Software Engineering, a prestigious software engineering conference.

Last year at Atlassian, our 12,000 engineers submitted more than 950,000 pull requests. From that work, we saw a huge opportunity to innovate, streamline the development process, and increase developer joy.

We bet that AI coding agents could help engineers solve a common blocker: repetitive, mundane, but necessary tasks that take up valuable time and energy. By using AI to speed up these tasks, we believed that engineers could focus on more complex and rewarding work.

Recently, we created the ‘Human-in-the-loop LLM-based agents framework’, or HULA. HULA reads a Jira work item, creates a plan, writes code, and even raises a pull request. And it does all of this while keeping the engineer in the driver’s seat. So far, HULA has merged ~900 pull requests for Atlassian software engineers, saving them time and allowing them to focus on other important tasks.

Our DevAI engineering and data science team collaborated with Associate Professor Kla Tantithamthavorn and Wannita Takerngsaksiri from Monash University and Dr. Patanamon Thongtanunam from The University of Melbourne to evaluate how HULA could integrate into an engineer’s workflow. HULA boosted our engineers’ efficiency, pushed the boundaries of what’s possible in AI-assisted software development, and represents a significant step in our mission to empower engineers and shape the future of coding.

As a result, our research has been accepted by the International Conference on Software Engineering (ICSE) 2025, in the Software Engineering in Practice (SEIP) track (the paper is available on arXiv). This recognition is a milestone for our team’s hard work and signifies HULA’s potential to transform software development in the age of AI. We are excited to share our findings in this blog and look forward to contributing to ongoing research in AI-assisted software engineering. This achievement not only demonstrates our commitment to innovation but also reinforces our belief in the collaborative synergy between humans and AI.

These insights come from an Atlassian research paper developed in collaboration with Monash University and the University of Melbourne. Credit goes to the Atlassian engineering, data science, product, and design teams who worked on this project.

Keen to try the latest version and be part of this AI innovation journey? Join our beta program, recently announced at TEAM’25, via this link.

The HULA process

Set context

The engineer chooses a simple Jira work item and enters relevant context (like repository info and task requirements).

Generate coding plan

HULA creates a coding plan: a list of files and the changes that will be made to complete the work item. The engineer then reviews the plan. They can make direct updates, or give HULA feedback and regenerate the plan. Once the engineer is happy, they approve the plan and move on to coding.
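
The review step described here is essentially a loop with a human approval gate. The sketch below is one way to picture it, not HULA’s implementation: the `Plan` class, the `review_plan` function, and the stub planner and reviewer are all hypothetical names invented for illustration, and direct edits by the engineer are left out to keep it short.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Plan:
    """A coding plan: the files to touch and the change intended for each."""
    files: list[str]
    changes: list[str]


# Hypothetical interfaces, not HULA's API.
# A planner maps (work item text, optional feedback) to a plan.
Planner = Callable[[str, Optional[str]], Plan]
# A reviewer returns None to approve, or a feedback string to request a new plan.
Reviewer = Callable[[Plan], Optional[str]]


def review_plan(work_item: str, planner: Planner, reviewer: Reviewer,
                max_rounds: int = 5) -> Optional[Plan]:
    """Regenerate the plan until the reviewer approves or the rounds run out."""
    feedback = None
    for _ in range(max_rounds):
        plan = planner(work_item, feedback)
        feedback = reviewer(plan)
        if feedback is None:
            return plan  # approved: the workflow can move on to coding
    return None  # never approved: nothing proceeds without human sign-off


# Stand-ins for the LLM and the engineer, for illustration only.
def stub_planner(work_item: str, feedback: Optional[str]) -> Plan:
    files = ["app.py"]
    if feedback:  # a real agent would read the feedback; the stub just adds a file
        files.append("tests/test_app.py")
    return Plan(files=files, changes=[f"update {name}" for name in files])


def stub_reviewer(plan: Plan) -> Optional[str]:
    if "tests/test_app.py" in plan.files:
        return None
    return "Please also update the tests."


approved = review_plan("Rename the config flag", stub_planner, stub_reviewer)
print(approved.files)  # ['app.py', 'tests/test_app.py']
```

The point of the design is that the only way out of the loop with a plan is an explicit approval from the reviewer.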

Generate code

HULA generates the code and incorporates feedback from tools like compilers and linters until the code passes validation. Again, the engineer must review the code, and they can make updates or give feedback before regenerating. Once the code’s ready, the engineer can raise a pull request.
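
The generate-and-validate cycle can be sketched as a bounded retry loop in which tool errors are fed back into the next attempt. This is a minimal illustration under stated assumptions, not HULA’s code: `generate_until_valid`, the two validators, and the stub generator are hypothetical, and Python’s built-in `compile()` stands in for a real compiler or linter.

```python
from typing import Callable, Optional

# Hypothetical interfaces, not HULA's API.
# A validator returns an error message, or None when the check passes.
Validator = Callable[[str], Optional[str]]


def syntax_check(code: str) -> Optional[str]:
    """Stand-in for a compiler: the built-in compile() rejects bad syntax."""
    try:
        compile(code, "<generated>", "exec")
    except SyntaxError as exc:
        return f"syntax error on line {exc.lineno}: {exc.msg}"
    return None


def line_length_check(code: str, limit: int = 79) -> Optional[str]:
    """Stand-in for a single linter rule."""
    for number, line in enumerate(code.splitlines(), start=1):
        if len(line) > limit:
            return f"line {number} is longer than {limit} characters"
    return None


def generate_until_valid(generate, validators, max_attempts=3):
    """Return (code, attempts) once every validator passes, else (None, max_attempts)."""
    errors: list[str] = []
    for attempt in range(1, max_attempts + 1):
        code = generate(errors)  # errors from the last attempt are fed back in
        errors = []
        for validate in validators:
            message = validate(code)
            if message is not None:
                errors.append(message)
        if not errors:
            return code, attempt
    return None, max_attempts


def stub_generate(errors: list[str]) -> str:
    """Stand-in for the LLM: the first draft is missing a colon."""
    if not errors:
        return "def add(a, b) return a + b\n"
    return "def add(a, b):\n    return a + b\n"


code, attempts = generate_until_valid(stub_generate, [syntax_check, line_length_check])
print(attempts)  # 2
```

Passing validation is only the automated gate. In the process described above, the engineer still reviews the result before any pull request is raised.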

Raise pull request

Once approved by the human, the code changes are compiled into a pull request on Bitbucket for review, following the team’s standard process.

Alternatively, the human can create a new branch from the code for further tweaks.

Performance

When working with agents powered by LLMs, it’s important to measure the quality and reliability of the output, as well as the experience of using the tool.

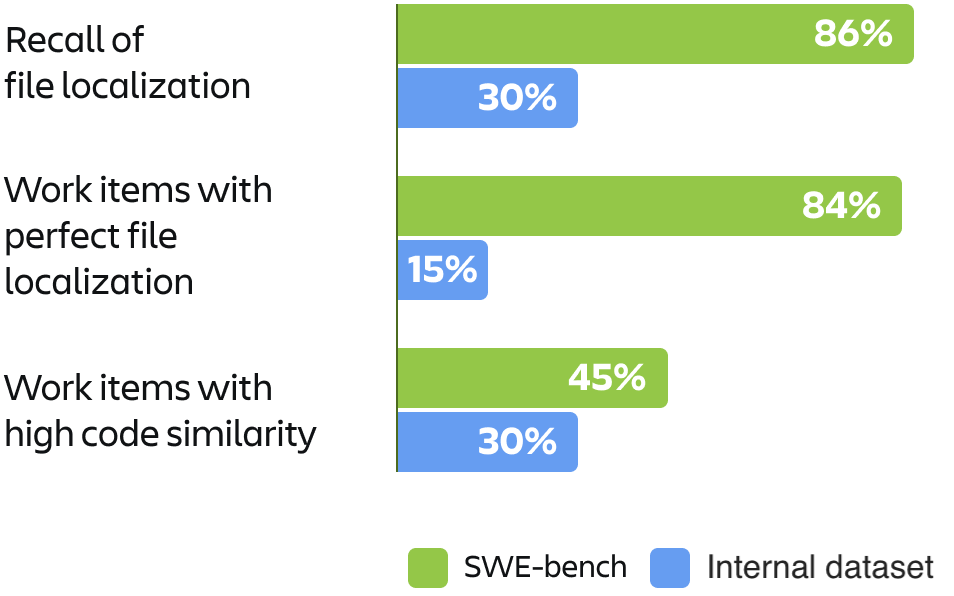

In September 2024, we used multiple quantitative methods to assess code quality. This included unit testing on the SWE-bench Verified dataset, where HULA passed unit tests for 31% of issues. We also used a GPT-based similarity metric to compare generated code with human-written code, and found that HULA’s code was rated highly similar to human code in 45% of cases.

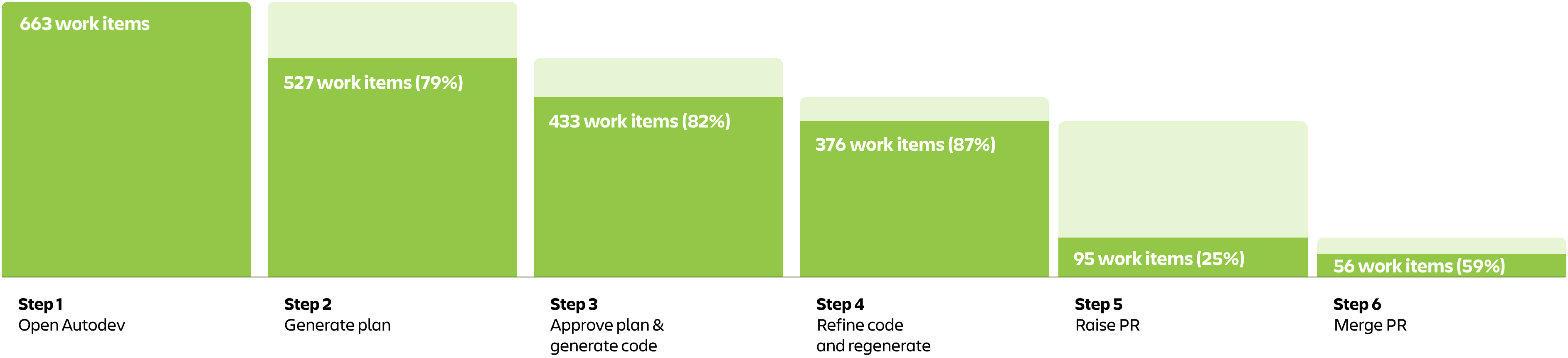

We also tracked how Atlassian engineers were using HULA in their work. Engineers used HULA on 663 work items in 2 months, and we found:

- HULA successfully generated coding plans for 79% of work items, and engineers approved 82% of those plans.

- HULA generated code for 87% of approved plans, and 25% of those code changes went all the way to the pull request stage.

- 59% of HULA-generated pull requests were merged into Atlassian code repositories.
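
To see what these percentages imply end to end, the short calculation below chains them together. It assumes each percentage is conditional on the stage before it, which is how the bullets read. The resulting counts are estimates derived from rounded percentages, not figures reported in this post.

```python
# Funnel estimate from the percentages above.
# Assumption: each rate applies to the output of the previous stage.
work_items = 663
stages = [
    ("plan generated", 0.79),
    ("plan approved", 0.82),
    ("code generated", 0.87),
    ("pull request raised", 0.25),
    ("pull request merged", 0.59),
]

remaining = float(work_items)
estimated = {}
for name, rate in stages:
    remaining *= rate
    estimated[name] = round(remaining)
    print(f"{name:<20} ~{estimated[name]}")

end_to_end_pct = round(remaining / work_items * 100, 1)
print(f"end-to-end merge rate: ~{end_to_end_pct}%")
```

Under that assumption, roughly 55 of the 663 work items (about 8%) ended in a merged pull request. The largest drop-off is between generated code and a raised pull request.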

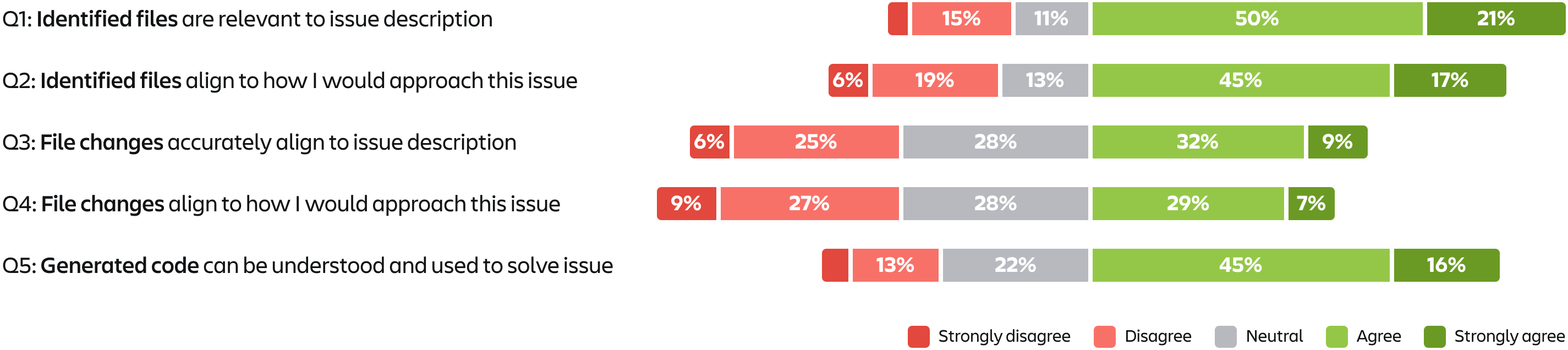

Finally, we surveyed 109 Atlassian engineers in September 2024 and asked them about their experience with HULA. More than 1 in 4 of these engineers had over 10 years of engineering experience (28%), and almost all of them were already familiar with AI coding agents (93%). We found:

- About 6 in 10 participants agreed HULA correctly identified the right files in the plan (62%), and about 1 in 3 felt the coding plan aligned with the approach they would take (36%).

- About 6 in 10 participants found the generated code easy to understand (61%), and 1 in 3 agreed the code solved their work item (33%).

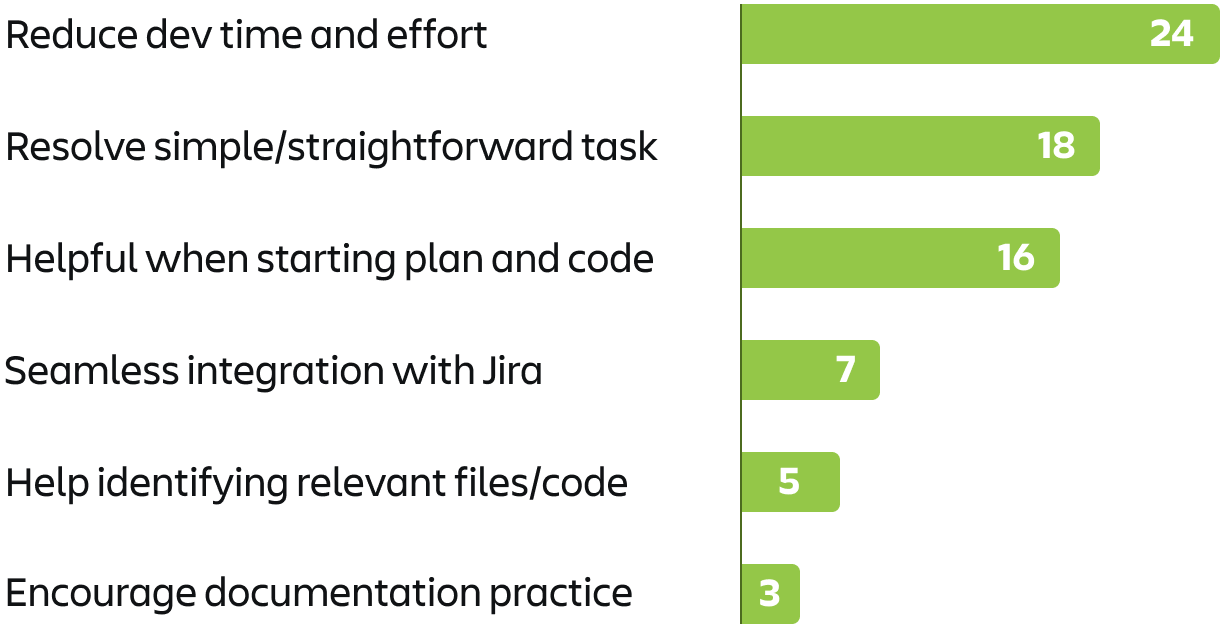

A number of themes also emerged from engineer feedback. Engineers found HULA useful for reducing development time and encouraging documentation. They also felt that generated code sometimes needed manual adjustment to work correctly, that the UX and UI needed improvement, and that context setting and code validation should be automated.

Conclusion

Our research shows the potential for HULA to reduce development time, improve documentation practices, and help engineers automate repetitive tasks.

Our engineering team will continue to evolve HULA and build its expertise in specific use cases, as well as improve the user experience, automate context setting, and improve code validation.

We want to ensure HULA exceeds engineers’ expectations of what an AI coding agent can do, and allows them to focus on the complex and valuable development work they do best.

Finally, we would like to acknowledge the important contributions of the DevAI engineering, data science, product, and design teams, whose collaborative efforts made both HULA and this publication possible.